Note

This page is reference documentation. It only explains the function signature, not how to use it. Please refer to the user guide for the big picture.

nilearn.datasets.fetch_localizer_contrasts

- nilearn.datasets.fetch_localizer_contrasts(contrasts, n_subjects=None, get_tmaps=False, get_masks=False, get_anats=False, data_dir=None, url=None, resume=True, verbose=1, legacy_format=True)

Download and load the Brainomics/Localizer dataset (94 subjects).

“The Functional Localizer is a simple and fast acquisition procedure based on a 5-minute functional magnetic resonance imaging (fMRI) sequence that can be run as easily and as systematically as an anatomical scan. This protocol captures the cerebral bases of auditory and visual perception, motor actions, reading, language comprehension and mental calculation at an individual level. Individual functional maps are reliable and quite precise. The procedure is described in more detail on the Functional Localizer page.” (see https://osf.io/vhtf6/)

You may cite [1] when using this dataset.

Scientific results obtained using this dataset are described in [2].
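A minimal sketch of a typical call, assuming nilearn is installed and the data can be downloaded. The wrapper fetch_motor_contrasts and its fetcher argument are hypothetical, introduced only so that the call can be checked against a stub instead of a real download; the keyword arguments passed through are those of the signature above.

```python
from typing import Callable, Optional


def fetch_motor_contrasts(n_subjects: int = 16, fetcher: Optional[Callable] = None):
    """Fetch 'left vs right button press' contrast maps and t maps.

    Hypothetical wrapper: `fetcher` defaults to nilearn's
    fetch_localizer_contrasts but can be replaced by a stub (e.g. in tests).
    """
    if fetcher is None:
        # Imported lazily so this module loads even without nilearn installed.
        from nilearn.datasets import fetch_localizer_contrasts as fetcher
    return fetcher(
        ["left vs right button press"],  # contrasts: always a list of str
        n_subjects=n_subjects,           # number of subjects to load
        get_tmaps=True,                  # also fetch the t maps
        legacy_format=False,             # tabular outputs as pandas DataFrames
    )
```

Without a fetcher argument, fetch_motor_contrasts(n_subjects=16) calls the real nilearn function and may therefore need network access; the returned Bunch exposes the paths under data["cmaps"] and data["tmaps"].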

- Parameters

- contrasts : list of str

The contrasts to be fetched (for all 94 subjects available). Allowed values are:

  - "checkerboard"
  - "horizontal checkerboard"
  - "vertical checkerboard"
  - "horizontal vs vertical checkerboard"
  - "vertical vs horizontal checkerboard"
  - "sentence listening"
  - "sentence reading"
  - "sentence listening and reading"
  - "sentence reading vs checkerboard"
  - "calculation (auditory cue)"
  - "calculation (visual cue)"
  - "calculation (auditory and visual cue)"
  - "calculation (auditory cue) vs sentence listening"
  - "calculation (visual cue) vs sentence reading"
  - "calculation vs sentences"
  - "calculation (auditory cue) and sentence listening"
  - "calculation (visual cue) and sentence reading"
  - "calculation and sentence listening/reading"
  - "calculation (auditory cue) and sentence listening vs calculation (visual cue) and sentence reading"
  - "calculation (visual cue) and sentence reading vs checkerboard"
  - "calculation and sentence listening/reading vs button press"
  - "left button press (auditory cue)"
  - "left button press (visual cue)"
  - "left button press"
  - "left vs right button press"
  - "right button press (auditory cue)"
  - "right button press (visual cue)"
  - "right button press"
  - "right vs left button press"
  - "button press (auditory cue) vs sentence listening"
  - "button press (visual cue) vs sentence reading"
  - "button press vs calculation and sentence listening/reading"

Equivalently, the original names can be used:

  - "checkerboard"
  - "horizontal checkerboard"
  - "vertical checkerboard"
  - "horizontal vs vertical checkerboard"
  - "vertical vs horizontal checkerboard"
  - "auditory sentences"
  - "visual sentences"
  - "auditory&visual sentences"
  - "visual sentences vs checkerboard"
  - "auditory calculation"
  - "visual calculation"
  - "auditory&visual calculation"
  - "auditory calculation vs auditory sentences"
  - "visual calculation vs sentences"
  - "auditory&visual calculation vs sentences"
  - "auditory processing"
  - "visual processing"
  - "visual processing vs auditory processing"
  - "auditory processing vs visual processing"
  - "visual processing vs checkerboard"
  - "cognitive processing vs motor"
  - "left auditory click"
  - "left visual click"
  - "left auditory&visual click"
  - "left auditory & visual click vs right auditory&visual click"
  - "right auditory click"
  - "right visual click"
  - "right auditory&visual click"
  - "right auditory & visual click vs left auditory&visual click"
  - "auditory click vs auditory sentences"
  - "visual click vs visual sentences"
  - "auditory&visual motor vs cognitive processing"
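The two lists above name the same contrasts under two schemes, largely in the same order. The sketch below pairs a few clearly aligned entries to validate and normalize user input before calling the fetcher. NEW_TO_ORIGINAL and normalize_contrasts are hypothetical helpers, the table is deliberately partial, and nilearn's own internal mapping may be organized differently.

```python
# Hypothetical, deliberately partial table: a few new-style contrast names
# paired with the original names listed at the same position above.
NEW_TO_ORIGINAL = {
    "checkerboard": "checkerboard",
    "sentence listening": "auditory sentences",
    "sentence reading": "visual sentences",
    "calculation (auditory cue)": "auditory calculation",
    "calculation (visual cue)": "visual calculation",
    "left button press": "left auditory&visual click",
    "right button press": "right auditory&visual click",
}
# Reverse lookup so that original names are accepted as well.
ORIGINAL_TO_NEW = {original: new for new, original in NEW_TO_ORIGINAL.items()}


def normalize_contrasts(contrasts):
    """Return the original name for each requested contrast.

    Both naming schemes are accepted; names missing from the (partial)
    table above raise ValueError, which catches typos before any download.
    """
    if isinstance(contrasts, str):
        # A bare string would otherwise be iterated character by character.
        raise TypeError("contrasts must be a list of str, not a single str")
    normalized = []
    for name in contrasts:
        if name in NEW_TO_ORIGINAL:
            normalized.append(NEW_TO_ORIGINAL[name])
        elif name in ORIGINAL_TO_NEW:
            normalized.append(name)
        else:
            raise ValueError(f"unknown contrast name: {name!r}")
    return normalized
```

Rejecting a bare string is a choice of this helper, made because the parameter is documented as a list of str.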

- n_subjects : int or list, optional

The number or list of subjects to load. If None is given, all 94 subjects are used.

- get_tmaps : bool, optional

Whether t maps should be fetched or not. Default=False.

- get_masks : bool, optional

Whether individual masks should be fetched or not. Default=False.

- get_anats : bool, optional

Whether individual structural images should be fetched or not. Default=False.

- data_dir : pathlib.Path or str, optional

Path where data should be downloaded. By default, files are downloaded in the home directory.

- url : str, optional

URL of the file to download; overrides the default download URL. Used for testing only (or if you set up a mirror of the data). Default=None.

- resume : bool, optional

Whether to resume download of a partly-downloaded file. Default=True.

- verbose : int, optional

Verbosity level (0 means no message). Default=1.

- legacy_format : bool, optional

If set to True, the fetcher will return recarrays. Otherwise, it will return pandas dataframes. Default=True.
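Code that must work with either setting of legacy_format can normalize a tabular output to a pandas DataFrame. A minimal sketch, assuming numpy and pandas are installed; the helper as_dataframe is hypothetical, and the sketch does not assume which field of the returned Bunch holds such a table.

```python
import numpy as np
import pandas as pd


def as_dataframe(table):
    """Hypothetical helper: return `table` as a pandas DataFrame.

    Accepts either a NumPy record array (legacy_format=True) or a
    DataFrame (legacy_format=False).
    """
    if isinstance(table, pd.DataFrame):
        return table  # already in the non-legacy format
    if isinstance(table, np.ndarray) and table.dtype.names:
        # Record/structured arrays carry their column names in the dtype.
        return pd.DataFrame.from_records(table)
    raise TypeError(f"expected a recarray or DataFrame, got {type(table).__name__}")
```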

- Returns

- data : Bunch

Dictionary-like object; the attributes of interest are:

- ‘cmaps’: string list

Paths to NIfTI contrast maps.

- ‘tmaps’: string list (if ‘get_tmaps’ is set to True)

Paths to NIfTI t maps.

- ‘masks’: string list (if ‘get_masks’ is set to True)

Paths to NIfTI files corresponding to the subjects' individual masks.

- ‘anats’: string list (if ‘get_anats’ is set to True)

Paths to NIfTI files corresponding to the subjects' structural images.
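For a single contrast, downstream code often needs each subject's contrast map together with its t map. A minimal sketch; the helper subject_maps is hypothetical, and it assumes (not stated on this page) that 'cmaps' and 'tmaps' list one file per subject in the same subject order, and that 'tmaps' is None or absent when get_tmaps=False. It uses only dictionary access, which the Bunch supports.

```python
def subject_maps(data):
    """Hypothetical helper: pair each contrast map with its t map, if any.

    Assumes `data` was returned for a single contrast and that
    data["cmaps"] and data["tmaps"] are in matching subject order.
    """
    cmaps = list(data["cmaps"])
    tmaps = data.get("tmaps")
    if tmaps is None or len(tmaps) == 0:
        # No t maps fetched: keep the contrast maps, pair them with None.
        return [(cmap, None) for cmap in cmaps]
    if len(tmaps) != len(cmaps):
        raise ValueError("expected one t map per contrast map")
    return list(zip(cmaps, tmaps))
```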


References

- [1]

Dimitri Papadopoulos Orfanos, Vincent Michel, Yannick Schwartz, Philippe Pinel, Antonio Moreno, Denis Le Bihan, and Vincent Frouin. The brainomics/localizer database. NeuroImage, 144:309–314, 2017. Data Sharing Part II. URL: https://www.sciencedirect.com/science/article/pii/S1053811915008745, doi:10.1016/j.neuroimage.2015.09.052.

- [2]

Philippe Pinel, Bertrand Thirion, Sébastien Meriaux, Antoinette Jobert, Julien Serres, Denis Le Bihan, Jean-Baptiste Poline, and Stanislas Dehaene. Fast reproducible identification and large-scale databasing of individual functional cognitive networks. BMC Neuroscience, 2007.

Examples using nilearn.datasets.fetch_localizer_contrasts

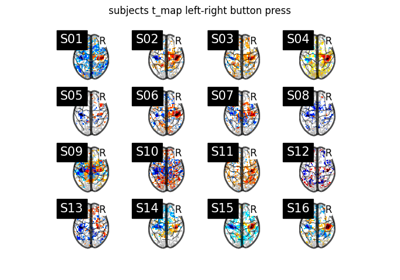

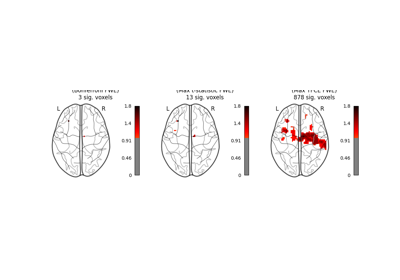

Massively univariate analysis of a motor task from the Localizer dataset