Note

This page is reference documentation. It explains only the class signature, not how to use the class. Please refer to the user guide for the big picture.

nilearn.decoding.SearchLight

- class nilearn.decoding.SearchLight(mask_img, process_mask_img=None, radius=2.0, estimator='svc', n_jobs=1, scoring=None, cv=None, verbose=0)

Implement searchlight analysis using an arbitrary type of classifier.

- Parameters

- mask_img : Niimg-like object

Boolean image giving the location of voxels containing usable signals. See http://nilearn.github.io/manipulating_images/input_output.html.

- process_mask_img : Niimg-like object, optional

Boolean image giving the voxels on which the searchlight should be computed. See http://nilearn.github.io/manipulating_images/input_output.html.

- radius : float, optional

Radius of the searchlight ball, in millimeters. Defaults to 2.

- estimator : 'svr', 'svc', or an estimator object implementing 'fit'

The object to use to fit the data.

- n_jobs : int, optional

The number of CPUs to use for the computation. -1 means 'all CPUs'. Default=1.

- scoring : string or callable, optional

The scoring strategy to use. See the scikit-learn documentation. If callable, it takes as arguments the fitted estimator, the test data (X_test) and the test target (y_test) if y is not None.

- cv : cross-validation generator, optional

A cross-validation generator. If None, 3-fold cross-validation is used, or 3-fold stratified cross-validation when y is supplied.

- verbose : int, optional

Verbosity level (0 means no message). Default=0.
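Because `radius` is expressed in millimeters rather than in voxels, the number of voxels inside each ball depends on the voxel size of the images. The sketch below is plain geometry, not nilearn's own code: the helper `voxels_in_ball` is hypothetical, and it assumes that a voxel belongs to the ball when the distance between voxel centers is at most the radius.

```python
import itertools
import math


def voxels_in_ball(radius_mm, voxel_size_mm):
    """Return voxel offsets whose centers lie within radius_mm of the center voxel.

    Hypothetical helper for illustration only (not part of nilearn).
    """
    # Largest offset along each axis that could still fall inside the ball.
    reach = [int(math.floor(radius_mm / size)) for size in voxel_size_mm]
    offsets = []
    for offset in itertools.product(*(range(-r, r + 1) for r in reach)):
        # Euclidean distance in millimeters between voxel centers.
        dist = math.sqrt(sum((o * s) ** 2 for o, s in zip(offset, voxel_size_mm)))
        if dist <= radius_mm:
            offsets.append(offset)
    return offsets


# 3 mm isotropic voxels: a 2 mm ball reaches no neighbor, a 5 mm ball does.
n_small = len(voxels_in_ball(2.0, (3.0, 3.0, 3.0)))
n_large = len(voxels_in_ball(5.0, (3.0, 3.0, 3.0)))
print(n_small, n_large)
```

Under these assumptions, a 2 mm radius on 3 mm voxels covers only the center voxel, so a larger radius is usually needed for the ball to include any neighbors at that resolution.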

Notes

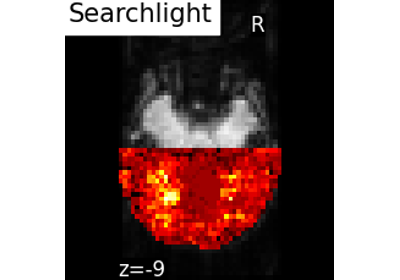

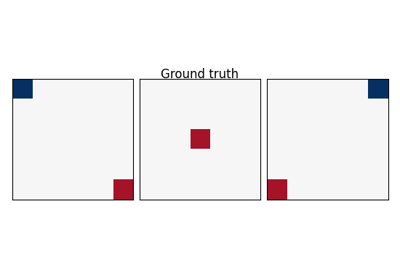

The searchlight [Kriegeskorte 06] is a widely used approach for the study of the fine-grained patterns of information in fMRI analysis. Its principle is relatively simple: a small group of neighboring features is extracted from the data, and the prediction function is instantiated on these features only. The resulting prediction accuracy is thus associated with all the features within the group, or only with the feature at the center. This yields a map of local fine-grained information that can be used for assessing hypotheses on the local spatial layout of the neural code under investigation.

[Kriegeskorte 06] Nikolaus Kriegeskorte, Rainer Goebel & Peter Bandettini. Information-based functional brain mapping. Proceedings of the National Academy of Sciences of the United States of America, vol. 103, no. 10, pages 3863-3868, March 2006.
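The principle described above can be sketched in a few lines of standard-library Python. This is a toy illustration, not nilearn's implementation: the voxels sit on a 1D line instead of a 3D volume, a nearest-class-mean rule stands in for the estimator, leave-one-out replaces the default cross-validation, and every function name is hypothetical.

```python
import random


def nearest_mean_predict(train_X, train_y, x):
    """Predict the label whose class mean is closest to x (stand-in classifier)."""
    best_label, best_dist = None, float("inf")
    for label in sorted(set(train_y)):
        rows = [r for r, t in zip(train_X, train_y) if t == label]
        mean = [sum(col) / len(rows) for col in zip(*rows)]
        dist = sum((a - b) ** 2 for a, b in zip(x, mean))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def loo_accuracy(X, y):
    """Leave-one-out cross-validated accuracy of the stand-in classifier."""
    hits = 0
    for i in range(len(X)):
        train_X = X[:i] + X[i + 1:]
        train_y = y[:i] + y[i + 1:]
        hits += nearest_mean_predict(train_X, train_y, X[i]) == y[i]
    return hits / len(X)


def toy_searchlight(X, y, positions_mm, radius_mm):
    """Score each voxel using only the voxels within radius_mm of it."""
    scores = []
    for center in positions_mm:
        neighbors = [j for j, p in enumerate(positions_mm)
                     if abs(p - center) <= radius_mm]
        local_X = [[row[j] for j in neighbors] for row in X]
        # The accuracy is assigned to the voxel at the center of the group.
        scores.append(loo_accuracy(local_X, y))
    return scores


rng = random.Random(0)
n_voxels, n_samples = 7, 12
y = [i % 2 for i in range(n_samples)]
X = [[rng.uniform(-0.5, 0.5) for _ in range(n_voxels)] for _ in range(n_samples)]
for row, label in zip(X, y):
    row[3] += 3.0 * label  # only voxel 3 carries class information

positions_mm = [3.0 * j for j in range(n_voxels)]
scores = toy_searchlight(X, y, positions_mm, radius_mm=3.0)
print(scores)
```

In this synthetic setup, the groups centered on voxels 2, 3 and 4 all contain the informative voxel, so the resulting score map is high around voxel 3 and close to chance elsewhere.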

- __init__(mask_img, process_mask_img=None, radius=2.0, estimator='svc', n_jobs=1, scoring=None, cv=None, verbose=0)

- fit(imgs, y, groups=None)

Fit the searchlight.

- Parameters

- imgs : Niimg-like object

4D image. See http://nilearn.github.io/manipulating_images/input_output.html.

- y : 1D array-like

Target variable to predict. Must have exactly as many elements as there are 3D images in imgs.

- groups : array-like, optional

Group label for each sample, used for cross-validation. Must have exactly as many elements as there are 3D images in imgs. Default: None. Note: this has no effect with scikit-learn < 0.18.

- get_params(deep=True)

Get parameters for this estimator.

- Parameters

- deep : bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns

- params : dict

Parameter names mapped to their values.

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form `<component>__<parameter>` so that it's possible to update each component of a nested object.

- Parameters

- **params : dict

Estimator parameters.

- Returns

- self : estimator instance

Estimator instance.
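The `<component>__<parameter>` convention is inherited from scikit-learn, so it can be illustrated on a scikit-learn `Pipeline` without any imaging data. The sketch below assumes scikit-learn is installed; the step names `scale` and `svc` are arbitrary labels chosen for this example.

```python
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import LinearSVC

pipe = Pipeline([("scale", StandardScaler()), ("svc", LinearSVC())])

# Update the C parameter of the step named "svc"; set_params returns the estimator.
returned = pipe.set_params(svc__C=0.1)

# deep=True also lists the parameters of the contained estimators.
deep_params = pipe.get_params(deep=True)
# deep=False lists only the pipeline's own constructor parameters.
shallow_params = pipe.get_params(deep=False)

print(deep_params["svc__C"])
print(sorted(shallow_params))
```

Since the `estimator` parameter accepts any object implementing `fit`, the same naming scheme should apply when such an object is nested inside a SearchLight, with `estimator` as the component name.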