Note

This page is reference documentation. It only explains the function signature, not how to use it. Please refer to the user guide for the big picture.

nilearn.datasets.fetch_miyawaki2008

- nilearn.datasets.fetch_miyawaki2008(data_dir=None, url=None, resume=True, verbose=1)

Download and load the Miyawaki et al. 2008 dataset (153 MB).

See [1].

- Parameters

- data_dir

pathlib.Path or str, optional. Path where the data should be downloaded. By default, files are downloaded in the home directory.

- url

str, optional. URL of the file to download, overriding the default download URL. Used for testing only (or if you set up a mirror of the data). Default=None.

- resume

bool, optional. Whether to resume the download of a partly downloaded file. Default=True.

- verbose

int, optional. Verbosity level (0 means no messages). Default=1.


- Returns

- data

Bunch, dictionary-like object. The attributes of interest are:

- ‘func’: string list

Paths to the NIfTI files with BOLD data

- ‘label’: string list

Paths to the text files containing session and target data

- ‘mask’: string

Path to the NIfTI mask file defining the target volume in the visual cortex

- ‘background’: string

Path to a NIfTI file containing an image usable as a background for the Miyawaki images.
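The returned Bunch can be consumed like any mapping. The sketch below is illustrative only: `summarize_miyawaki` and `fetch_and_summarize` are hypothetical helpers, not part of nilearn, and the code assumes that `func` and `label` list their files in the same run order, so that they can be paired one-to-one.

```python
from collections.abc import Mapping


def summarize_miyawaki(data: Mapping) -> dict:
    """Summarize the fields returned by fetch_miyawaki2008 (hypothetical helper).

    ``data`` is expected to be the Bunch returned by the fetcher; any mapping
    with the 'func', 'label', 'mask' and 'background' keys works the same way.
    """
    func = list(data["func"])
    label = list(data["label"])
    # Assumption: one label file per functional run, in the same order.
    if len(func) != len(label):
        raise ValueError(
            f"expected one label file per run, got {len(func)} func "
            f"and {len(label)} label files"
        )
    return {
        "n_runs": len(func),
        "runs": list(zip(func, label)),  # (BOLD image path, label file path) pairs
        "mask": data["mask"],
        "background": data["background"],
    }


def fetch_and_summarize(data_dir=None, verbose=0):
    # Imported lazily so summarize_miyawaki can be used without nilearn installed.
    # Calling this function downloads roughly 153 MB on first use.
    from nilearn.datasets import fetch_miyawaki2008

    data = fetch_miyawaki2008(data_dir=data_dir, verbose=verbose)
    return summarize_miyawaki(data)
```

Keeping the network call in a separate function lets `summarize_miyawaki` be checked against a plain dict without downloading anything.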

Notes

This dataset is available on the brainliner website.

References

- [1] Yoichi Miyawaki, Hajime Uchida, Okito Yamashita, Masa-aki Sato, Yusuke Morito, Hiroki C. Tanabe, Norihiro Sadato, and Yukiyasu Kamitani. Visual image reconstruction from human brain activity using a combination of multiscale local image decoders. Neuron, 60(5):915–929, 2008. URL: https://www.sciencedirect.com/science/article/pii/S0896627308009586, doi:10.1016/j.neuron.2008.11.004.

Examples using nilearn.datasets.fetch_miyawaki2008

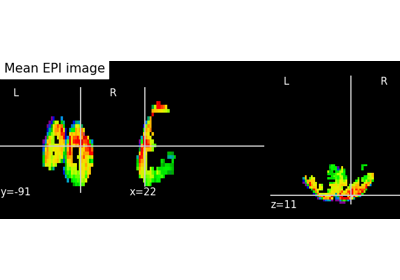

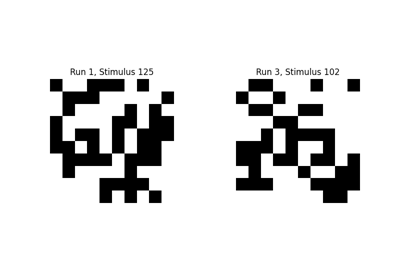

Encoding models for visual stimuli from Miyawaki et al. 2008

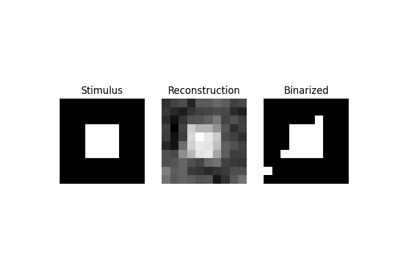

Reconstruction of visual stimuli from Miyawaki et al. 2008